Your product pages are where browsers become buyers, but how do you know if they’re truly optimized? A/B testing removes the guesswork from eCommerce optimization by letting real customer behavior guide your decisions. Instead of redesigning your entire site based on hunches, you can test specific elements, measure what works, and implement changes that genuinely boost conversions. These five A/B testing strategies are simple to execute, grounded in conversion rate optimization (CRO) principles, and proven to increase sales across eCommerce stores of all sizes.

Why A/B Testing Matters for Product Page Performance

A/B testing, also called split testing, compares two versions of a page element to determine which performs better. By randomly showing variant A to half your visitors and variant B to the other half, you collect concrete data on what drives more conversions.

For eCommerce sellers, this approach eliminates costly assumptions. Rather than implementing design changes that might decrease conversions, you validate every decision with real user data. A/B testing is particularly valuable because it allows you to optimize based on actual customer behavior rather than industry best practices that may not apply to your specific audience.
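To make the 50/50 split concrete, here is a minimal sketch of how a visitor could be assigned to a variant. It assumes you have some stable visitor identifier (the `visitor-…` IDs and the experiment name below are hypothetical), and it is not how any particular testing tool works internally. Hashing keeps the assignment consistent, so a returning visitor sees the same variant each time.

```python
import hashlib

def assign_variant(visitor_id: str, experiment: str, variants=("A", "B")) -> str:
    """Deterministically map a visitor to a variant with a roughly even split."""
    # Hash the experiment name together with the visitor ID so the same
    # visitor always lands in the same variant within one experiment.
    digest = hashlib.sha256(f"{experiment}:{visitor_id}".encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % len(variants)
    return variants[bucket]

# Hypothetical visitor IDs, for illustration only.
counts = {"A": 0, "B": 0}
for i in range(10_000):
    counts[assign_variant(f"visitor-{i}", "product-title-test")] += 1

print(counts)  # the two counts should be close to each other
```

Including the experiment name in the hash means a visitor who lands in variant A of one test is not automatically placed in variant A of every other test.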

Key Performance Indicators to Track

Monitor these metrics when running product page A/B tests:

- Conversion rate: Percentage of visitors who complete a purchase

- Add-to-cart rate: Percentage of visitors who add the product to their cart

- Bounce rate: Percentage of visitors who leave without interaction

- Average order value: Average revenue per completed order

- Time on page: How long visitors engage with your content

Understanding these KPIs helps you interpret test results accurately and identify which changes create meaningful business impact.
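The metrics listed above are simple ratios, and it can help to compute them yourself when checking what a dashboard reports. The sketch below assumes you can export raw counts per variant. The field names and numbers are made up for illustration, time on page is left out because it needs session-level data, and revenue per visitor is added as a convenience metric.

```python
from dataclasses import dataclass

@dataclass
class VariantStats:
    visitors: int      # unique visitors who saw the variant
    add_to_carts: int  # visitors who added the product to their cart
    bounces: int       # visitors who left without interacting
    orders: int        # completed purchases
    revenue: float     # total revenue from those orders

def kpis(s: VariantStats) -> dict:
    """Compute the core product page KPIs from raw counts."""
    return {
        "conversion_rate": s.orders / s.visitors,
        "add_to_cart_rate": s.add_to_carts / s.visitors,
        "bounce_rate": s.bounces / s.visitors,
        "average_order_value": s.revenue / s.orders if s.orders else 0.0,
        "revenue_per_visitor": s.revenue / s.visitors,
    }

# Made-up numbers, for illustration only.
example = VariantStats(visitors=2_000, add_to_carts=260, bounces=900,
                       orders=80, revenue=6_400.0)
for name, value in kpis(example).items():
    print(f"{name}: {value:.4f}")
```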

5 A/B Test Ideas for Higher Conversions

1. Test Different Product Titles

What to Test: Your product title is often the first element visitors read. Test keyword-focused titles against benefit-driven alternatives:

- Variant A: “Wireless Bluetooth Headphones, 40H Battery Life”

- Variant B: “Never Miss a Beat: Premium Wireless Headphones with 40-Hour Playtime”

Why It Matters: Product titles serve dual purposes: they need to rank in search results while also compelling visitors to keep reading. Keyword-heavy titles may perform better in search, but benefit-focused titles can increase engagement once visitors land on your page.

Some audiences respond better to technical specifications, while others prefer emotional benefits. Testing reveals which approach resonates with your specific customers.

Tools to Use:

- VWO (Visual Website Optimizer)

- Optimizely

- Shopify apps like Neat A/B Testing or Intelligems

Expected Outcome: Retailers typically see 5-15% improvements in engagement metrics when they optimize titles. However, results vary significantly by product category. Technical products often benefit from spec-focused titles, while lifestyle products perform better with benefit-driven copy.

2. Try Alternative Product Image Sets

What to Test: Images drive purchase decisions in eCommerce. Compare these approaches:

- Variant A: Clean product shots on white backgrounds

- Variant B: Lifestyle images showing the product in use

- Variant C: Mixed approach with technical shots first, lifestyle images below

Why It Matters: Visual content creates emotional connections that text alone cannot achieve. Research consistently shows that product images significantly impact conversion rates because they help customers visualize ownership and usage.

White background images communicate professionalism and let customers focus on product details. Lifestyle images help shoppers imagine themselves using the product and create aspirational appeal. The optimal approach depends on your product type and target audience.

Fashion and home goods typically perform better with lifestyle imagery, while electronics and tools may benefit from detailed technical shots that showcase features and build specifications.

Tools to Use: Any of the testing platforms described under “Tools to Run and Analyze A/B Tests” below.

Expected Outcome: Image optimization tests frequently produce 10-30% conversion rate improvements. The impact is particularly strong for products where visualization matters, such as apparel, furniture, and beauty products.

3. Experiment with Price Display Formats

What to Test: Price presentation influences purchase psychology. Test these variations:

- Variant A: Standard format: “$49.99”

- Variant B: Threshold framing: “Under $50”

- Variant C: Strikethrough comparison: “~~$79.99~~ $49.99”

- Variant D: Payment plans: “$16.66/month for 3 months”

Why It Matters: Pricing psychology affects how customers perceive value. The same price presented differently can significantly impact conversion rates because of cognitive biases in how humans process numerical information.

Strikethrough pricing leverages the anchoring effect, making the current price seem more attractive by comparison. Threshold framing (“Under $50”) minimizes price objections by emphasizing affordability. Payment plans reduce perceived cost by breaking large amounts into smaller chunks.

Tools to Use: Any of the testing platforms described under “Tools to Run and Analyze A/B Tests” below.

Expected Outcome: Price presentation tests can yield 5-20% conversion rate improvements. The most effective format depends on your price point, target market, and competitive landscape. Premium products often benefit from strikethrough pricing that justifies the cost, while budget items may perform better with threshold framing.

4. Change the Placement and Style of Reviews

What to Test: Customer reviews build trust and influence purchase decisions, but their placement affects impact. Test these configurations:

- Variant A: Reviews appear below product description

- Variant B: Star ratings displayed prominently near product title

- Variant C: Featured review snippets above the fold

- Variant D: Reviews integrated throughout the page near relevant features

Why It Matters: Social proof reduces purchase anxiety, particularly for first-time buyers. The timing and prominence of reviews affect their persuasive power. Reviews displayed early in the page experience can accelerate buying decisions, while detailed reviews lower down help customers conducting thorough research.

Many stores hide reviews below the fold, forcing visitors to scroll extensively before accessing this crucial trust signal. Moving reviews higher on the page ensures more visitors see social proof during their initial evaluation.

Consider testing review formatting as well. Verified purchase badges, helpful vote counts, and photo reviews all increase credibility beyond simple star ratings.

Tools to Use:

- Optimizely

- AB Tasty

- Yotpo (includes built-in review optimization features)

Expected Outcome: Review placement optimization typically increases conversions by 10-25%. Products with strong reviews benefit most from prominent placement, while items with mixed reviews may perform better with strategic positioning that emphasizes positive feedback.

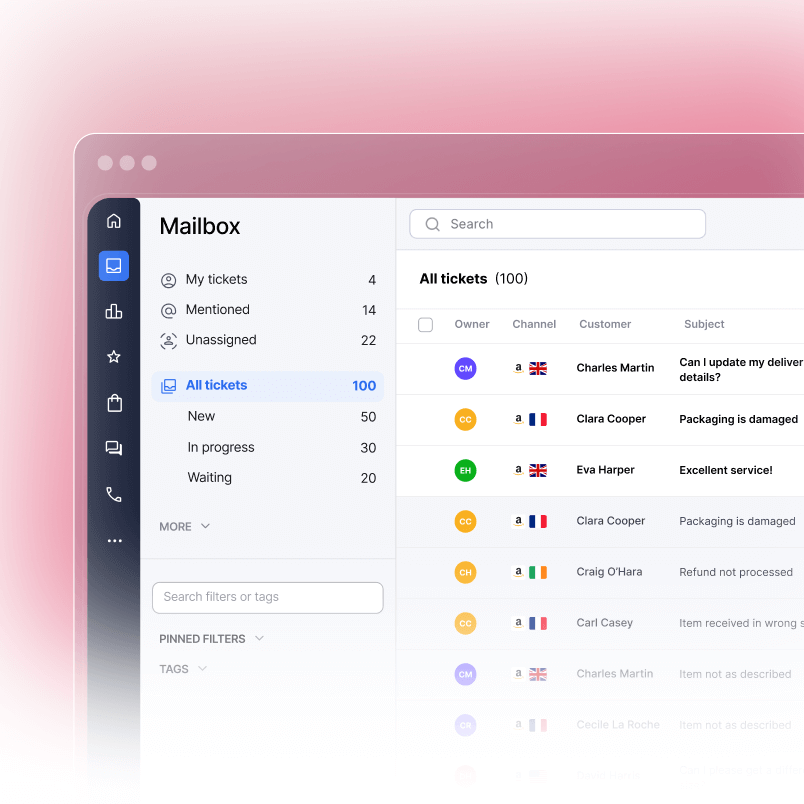

For help managing customer feedback across all your sales channels, explore eDesk’s customer view feature to centralize reviews and support interactions.

5. Compare CTA Button Colors and Wording

What to Test: Your call-to-action button is the final step before purchase. Test these elements:

Button Copy:

- Variant A: “Add to Cart”

- Variant B: “Buy Now”

- Variant C: “Get Yours Today”

- Variant D: “Start Your Order”

Button Color:

- Test high-contrast colors against your site’s primary palette

- Consider emotional associations (green for go, orange for urgency)

Why It Matters: CTA buttons trigger action. The words you choose and how prominently the button stands out both influence whether visitors click. “Add to Cart” suggests a non-committal action that visitors can reverse later, reducing purchase anxiety. “Buy Now” creates urgency but may increase hesitation for undecided shoppers.

Button color affects visibility and emotional response. The button must stand out from surrounding elements while feeling cohesive with your overall design. High-contrast combinations increase click-through rates, but the specific color matters less than ensuring the button is immediately visible.

Tools to Use: Any of the testing platforms described under “Tools to Run and Analyze A/B Tests” below.

Expected Outcome: CTA optimization tests commonly produce 5-15% conversion improvements. The impact is highest when your current button is difficult to locate or uses passive language that doesn’t encourage action.

Consider testing button size and placement alongside color and copy. A button that’s too small or positioned awkwardly can suppress conversions regardless of color or wording.

Tools to Run and Analyze A/B Tests

Selecting the right testing platform depends on your technical capabilities, budget, and eCommerce platform. Here are the top tools for product page optimization:

VWO (Visual Website Optimizer)

VWO offers comprehensive A/B testing with advanced targeting options, heatmaps, and visitor recordings. The platform works across all major eCommerce platforms and includes robust statistical analysis. Pricing starts around $200/month for small businesses, making it suitable for growing stores with dedicated optimization budgets. The visual editor requires no coding, and you can create tests in minutes while tracking results through an intuitive dashboard.

Optimizely

Enterprise-focused testing platform with powerful personalization features. Optimizely excels at complex, multi-variant tests and provides detailed analytics dashboards. The platform requires significant investment, typically $50,000+ annually, making it appropriate for large retailers running extensive optimization programs.

AB Tasty

Mid-market solution offering A/B testing, personalization, and feature management. AB Tasty provides a user-friendly interface with strong analytics capabilities and integrates seamlessly with popular eCommerce platforms. Pricing typically ranges from $500-2,000/month depending on traffic volume and features.

Shopify A/B Testing Apps

For Shopify merchants, native apps like Neat A/B Testing and Intelligems integrate directly with your theme. These tools simplify test creation and automatically calculate statistical significance. Pricing ranges from $30-300/month depending on features and traffic volume.

Intelligems

Purpose-built for eCommerce, Intelligems offers advanced testing capabilities including price optimization, shipping threshold tests, and promotion experiments. The platform emphasizes revenue impact over simple conversion metrics, helping you understand the financial implications of each change.

When selecting a tool, prioritize statistical reliability over feature quantity. A simple platform that produces accurate results beats a complex tool that overwhelms your team or generates questionable data.

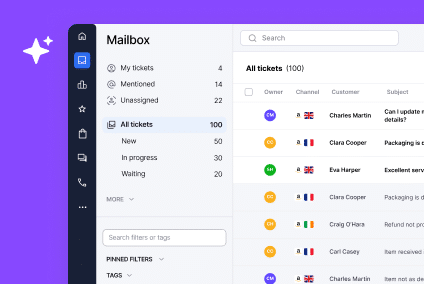

For merchants running tests across multiple marketplaces like Amazon, eBay, and Shopify, managing customer support consistently becomes crucial. eDesk’s smart inbox centralizes all customer communications, ensuring you can deliver excellent service while optimizing your product pages.

Example: A/B Test Result Breakdown

Here’s a real-world example of how A/B testing impacts product page performance:

- Test Objective: Increase add-to-cart rate for wireless earbuds

- Element Tested: Product image style

- Duration: 14 days

- Traffic: 5,000 visitors per variant

| Metric | Variant A (White Background) | Variant B (Lifestyle Images) | Improvement |
| --- | --- | --- | --- |
| Add-to-Cart Rate | 12.4% | 16.8% | +35.5% |
| Conversion Rate | 4.2% | 5.7% | +35.7% |
| Bounce Rate | 48% | 41% | -14.6% |
| Average Order Value | $87 | $94 | +8.0% |
| Revenue per Visitor | $3.65 | $5.36 | +46.8% |
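The Improvement column is a relative change, not a difference in percentage points. As a quick sketch of the arithmetic, the snippet below recomputes each figure from the two variant columns. The function name is an illustrative choice, and revenue per visitor is assumed to be conversion rate multiplied by average order value, which matches the dollar amounts in the table.

```python
def relative_lift(control: float, variant: float) -> float:
    """Relative change of the variant versus the control, as a percentage."""
    return (variant - control) / control * 100

# (Variant A, Variant B) pairs taken from the table above.
results = {
    "Add-to-Cart Rate": (12.4, 16.8),
    "Conversion Rate": (4.2, 5.7),
    "Bounce Rate": (48.0, 41.0),
    "Average Order Value": (87.0, 94.0),
}
for metric, (a, b) in results.items():
    print(f"{metric}: {relative_lift(a, b):+.1f}%")

# Assumption: revenue per visitor = conversion rate x average order value.
rpv_a = 0.042 * 87  # about $3.65
rpv_b = 0.057 * 94  # about $5.36
print(f"Revenue per Visitor: {relative_lift(rpv_a, rpv_b):+.1f}%")
```

Computed from unrounded values, the revenue per visitor lift comes out at roughly 46.6%. The table's 46.8% follows from the rounded dollar figures, a small discrepancy worth knowing about when you compare your own calculations with a dashboard.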

Winner: Variant B (Lifestyle Images)

Analysis: The lifestyle images helped visitors visualize themselves using the product, resulting in significantly higher engagement and conversion. The improvement in average order value suggests that the lifestyle images also increased cross-sell effectiveness, as customers purchased additional accessories when they could see the complete product ecosystem.

Implementation: After confirming statistical significance (95% confidence level), the store permanently implemented lifestyle images as the primary product shots, with technical specifications shown in secondary image positions. This single change increased monthly revenue by approximately $18,000 for this product line alone.
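If you want to sanity-check a significance claim like the one above, a two-proportion z-test is one common approach. The article does not say which method the store or its testing tool used, so treat this as an illustrative sketch. The order counts are derived from the stated figures: 4.2% and 5.7% of 5,000 visitors are 210 and 285 orders.

```python
from math import sqrt
from statistics import NormalDist

def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z, two-sided p-value) for the difference between two conversion rates."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    # Pooled rate under the null hypothesis that both variants convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value

# 5,000 visitors per variant; 210 and 285 orders (derived from 4.2% and 5.7%).
z, p = two_proportion_z_test(210, 5_000, 285, 5_000)
print(f"z = {z:.2f}, p = {p:.4f}")
print("significant at the 95% level" if p < 0.05 else "not significant at the 95% level")
```

A p-value below 0.05 corresponds to the 95% confidence threshold mentioned in the example.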

This example demonstrates why A/B testing produces better results than making changes based on assumptions. While conventional wisdom might suggest that clean product shots perform best, real customer data revealed a different preference for this specific audience.

Best Practices for Running Successful A/B Tests

Establish Clear Hypotheses

Before launching any test, document what you’re testing and why. A strong hypothesis follows this format: “Changing [element] will increase [metric] because [reasoning based on user psychology or past data].”

Example: “Changing the CTA button from ‘Add to Cart’ to ‘Buy Now’ will increase conversion rate because it creates more urgency and reduces decision-making friction.”

Test One Element at a Time

While multivariate testing has its place, start with simple A/B tests that change a single element. This approach ensures you know exactly what caused any performance differences. Testing multiple elements simultaneously makes it impossible to determine which change drove results.

Run Tests Long Enough to Reach Statistical Significance

Most testing platforms calculate statistical significance automatically, but understand what this means. Generally, aim for:

- At least 1,000 conversions per variant as a conservative target (very large effects can reach significance with fewer)

- Minimum 95% statistical confidence

- Two full weeks to account for weekly traffic patterns

- Coverage of different traffic sources and customer segments

Ending tests prematurely leads to false conclusions. Early results often show dramatic differences that disappear once you collect sufficient data.
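How much data is “sufficient” depends on your baseline conversion rate and the size of the lift you hope to detect. The sketch below uses the standard normal-approximation formula for comparing two proportions. The 3% baseline and the 80% statistical power are assumptions chosen for illustration and do not come from any specific tool.

```python
from math import ceil, sqrt
from statistics import NormalDist

def visitors_per_variant(baseline: float, relative_lift: float,
                         alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate visitors needed per variant to detect a relative lift."""
    p1 = baseline
    p2 = baseline * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided test
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (p1 + p2) / 2
    numerator = (z_alpha * sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return ceil(numerator / (p2 - p1) ** 2)

# Assumed 3% baseline conversion rate, for illustration only.
for lift in (0.05, 0.10, 0.20):
    n = visitors_per_variant(0.03, lift)
    print(f"{lift:.0%} relative lift: about {n:,} visitors per variant")
```

Smaller expected lifts need far more traffic, which is why low-traffic stores are usually better off testing bold changes than subtle ones.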

Consider Seasonal Variations

Holiday shopping behavior differs from typical patterns. If possible, avoid running critical tests during major shopping events unless you’re specifically optimizing for those periods. Black Friday test results may not apply to February traffic.

Document Everything

Maintain detailed records of every test, including:

- Test hypothesis and reasoning

- Variants tested

- Date range and sample size

- Results and statistical significance

- Implementation decisions

- Lessons learned

This documentation prevents repeating failed experiments and helps new team members understand your optimization history.
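One lightweight way to keep these records consistent is a small structured template. The sketch below is only a suggestion: the class name, field names, and dates are placeholders, and the hypothesis text reuses the CTA example from earlier in this guide.

```python
import json
from dataclasses import dataclass, field, asdict
from datetime import date

@dataclass
class ExperimentRecord:
    element: str
    metric: str
    reasoning: str
    variants: list[str]
    start: date
    end: date
    visitors_per_variant: int
    result: str = ""
    significant: bool = False
    decision: str = ""
    lessons: list[str] = field(default_factory=list)

    def hypothesis(self) -> str:
        # Follows the "Changing X will increase Y because Z" format.
        return f"Changing {self.element} will increase {self.metric} because {self.reasoning}."

# Placeholder values, for illustration only.
record = ExperimentRecord(
    element="the CTA button from 'Add to Cart' to 'Buy Now'",
    metric="conversion rate",
    reasoning="it creates more urgency and reduces decision-making friction",
    variants=["Add to Cart", "Buy Now"],
    start=date(2024, 1, 8),
    end=date(2024, 1, 22),
    visitors_per_variant=5_000,
)
print(record.hypothesis())

# Dates are not JSON-serializable by default, so convert them to strings.
serialized = json.dumps(asdict(record), default=str, indent=2)
print(serialized)
```

Storing each record as JSON (or a spreadsheet row with the same columns) makes past tests easy to search before you plan a new one.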

Managing customer interactions effectively becomes even more important as you optimize your product pages and drive more traffic to your store. Tools like eDesk help ensure you can handle increased support volume without compromising service quality.

Common A/B Testing Mistakes to Avoid

Declaring Winners Too Early

Sample size and test duration matter more than dramatic early results. A variant that’s winning after 100 conversions may lose after 1,000. Resist the temptation to end tests prematurely, even when results look promising.
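A small simulation can show why checking results repeatedly and stopping at the first “significant” reading is risky. The sketch below runs A/A tests, where both variants are identical by construction, so every significant result is a false positive. All parameters (5% conversion rate, 2,000 visitors per variant, a check every 200 visitors) are arbitrary assumptions.

```python
import random
from math import sqrt
from statistics import NormalDist

def p_value(c_a: int, n_a: int, c_b: int, n_b: int) -> float:
    """Two-sided p-value from a two-proportion z-test."""
    pooled = (c_a + c_b) / (n_a + n_b)
    if pooled in (0, 1):
        return 1.0  # no variation yet, nothing to test
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (c_b / n_b - c_a / n_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))

def simulate(runs=300, visitors=2_000, check_every=200, rate=0.05, seed=1):
    """Return (false positive rate with peeking, false positive rate at the end only)."""
    rng = random.Random(seed)
    peeking_hits = final_hits = 0
    for _ in range(runs):
        c_a = c_b = 0
        ever_significant = False
        for n in range(1, visitors + 1):
            # Both variants share the same true conversion rate.
            c_a += rng.random() < rate
            c_b += rng.random() < rate
            if n % check_every == 0 and p_value(c_a, n, c_b, n) < 0.05:
                ever_significant = True
        peeking_hits += ever_significant
        final_hits += p_value(c_a, visitors, c_b, visitors) < 0.05
    return peeking_hits / runs, final_hits / runs

peeking, final_only = simulate()
print(f"False positives when stopping at any significant check: {peeking:.1%}")
print(f"False positives when evaluating only at the end: {final_only:.1%}")
```

Because the final check is one of the interim checks, the peeking rate can never be lower than the end-only rate, and in practice it tends to be noticeably higher.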

Ignoring Mobile vs. Desktop Performance

A change that improves desktop conversions may hurt mobile performance. Always segment results by device type and consider running device-specific tests for elements that display differently across screen sizes.
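Segmenting is mostly a matter of grouping your results before computing rates. The sketch below uses invented numbers in which variant B wins overall but loses on mobile, the kind of pattern an aggregate report would hide. The row layout is a hypothetical export format, not the output of any particular tool.

```python
from collections import defaultdict

# Hypothetical export rows: (variant, device, visitors, orders).
rows = [
    ("A", "desktop", 2_000, 100),
    ("B", "desktop", 2_000, 130),
    ("A", "mobile", 3_000, 105),
    ("B", "mobile", 3_000, 90),
]

def conversion_by(rows, key_fn):
    """Aggregate visitors and orders by an arbitrary key and return conversion rates."""
    totals = defaultdict(lambda: [0, 0])  # key -> [visitors, orders]
    for variant, device, visitors, orders in rows:
        bucket = totals[key_fn(variant, device)]
        bucket[0] += visitors
        bucket[1] += orders
    return {key: orders / visitors for key, (visitors, orders) in totals.items()}

overall = conversion_by(rows, lambda variant, device: variant)
by_device = conversion_by(rows, lambda variant, device: (variant, device))

for variant, rate in sorted(overall.items()):
    print(f"Overall {variant}: {rate:.2%}")
for (variant, device), rate in sorted(by_device.items()):
    print(f"{device} {variant}: {rate:.2%}")
```

Keep in mind that each segment has fewer visitors than the whole test, so a per-device difference needs its own significance check before you act on it.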

Testing Too Many Elements Simultaneously

Complex multivariate tests require far more traffic to reach significance, because every combination of changes needs its own adequately sized sample. Stick with simple A/B tests unless you have massive traffic volumes (typically 100,000+ monthly visitors).

Forgetting About Returning Customers

Most testing tools show tests to all visitors equally, but returning customers may respond differently than first-time browsers. Consider segmenting results by customer status and creating separate experiences if performance differs significantly.

Optimizing for the Wrong Metrics

Increasing click-through rates matters little if it doesn’t improve conversions or revenue. Always tie tests back to business objectives like revenue, profit margin, or customer lifetime value, not vanity metrics like engagement.

Scaling Your A/B Testing Program

Once you’ve completed a few successful tests, consider expanding your optimization efforts:

- Create a Testing Roadmap: Document potential tests ranked by expected impact and implementation difficulty. Prioritize high-impact, low-effort tests first.

- Establish a Testing Cadence: Run at least one test per month to maintain optimization momentum. More frequent testing accelerates learning but requires adequate traffic to reach significance.

- Form a Cross-Functional Team: Involve marketing, design, development, and customer service in your testing program. Each team brings valuable perspectives on what to test and how to interpret results.

- Build a Testing Library: Categorize winning tests by improvement magnitude. Small wins (2-5% lift) compound over time, while breakthrough tests (20%+ improvement) justify additional investment in optimization.

- Extend Testing Beyond Product Pages: Apply A/B testing principles to checkout flows, email campaigns, and customer support workflows to create cohesive optimization across your entire customer experience.
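A testing roadmap can start as a simple scored list. The sketch below ranks the five test ideas from this guide by an impact-to-effort ratio. The 1 to 5 scores are invented placeholders that you would replace with your own estimates, and the ratio is just one possible way to prioritize.

```python
# Impact and effort scores (1 = low, 5 = high) are placeholders, not recommendations.
ideas = [
    {"name": "Product titles", "impact": 2, "effort": 1},
    {"name": "Product image sets", "impact": 5, "effort": 4},
    {"name": "Price display format", "impact": 4, "effort": 3},
    {"name": "Review placement", "impact": 5, "effort": 2},
    {"name": "CTA button copy", "impact": 3, "effort": 1},
]

def priority(idea: dict) -> float:
    """Higher expected impact and lower effort both raise the score."""
    return idea["impact"] / idea["effort"]

ranked = sorted(ideas, key=priority, reverse=True)
for position, idea in enumerate(ranked, start=1):
    print(f"{position}. {idea['name']} (score {priority(idea):.2f})")
```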

As your eCommerce business grows, optimizing both your product pages and customer support processes ensures you can scale efficiently. Book a demo with eDesk to see how our AI-powered platform helps growing merchants manage support across all sales channels while you focus on conversion optimization.

FAQs

How long should I run an A/B test?

Run tests for at least two full weeks to account for weekly traffic patterns and ensure you collect data from different customer segments. Additionally, aim for at least 1,000 conversions per variant and 95% statistical confidence before declaring a winner. High-traffic sites may reach significance faster, while smaller stores need longer test durations to gather sufficient data.

What’s a good conversion rate for a product page?

Average eCommerce conversion rates typically fall between 2% and 3%, but this varies dramatically by industry, price point, and traffic source. Fashion retailers typically see 1-2% conversion rates, while niche specialty stores may convert at 5-10%. Focus on improving your own baseline rather than comparing against industry averages, as numerous factors affect conversion performance.

Do I need coding skills to A/B test on Shopify?

No. Most Shopify A/B testing apps include visual editors that let you modify elements without code. Tools like Neat A/B Testing and Intelligems work directly within the Shopify dashboard and require only point-and-click configuration. However, advanced tests involving complex functionality may benefit from developer assistance.

How many variants should I test at once?

Start with simple A/B tests comparing two variants. A/B/n tests (comparing three or more versions of one element) and multivariate tests (testing combinations of changes to several elements at once) require significantly more traffic to reach statistical significance. As a rule of thumb, multiply the sample size you need per variant by the number of variants. If you need 1,000 conversions per variant, a two-variant A/B test needs 2,000 conversions in total, while a four-variant test needs 4,000 to produce reliable results.
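The arithmetic behind that rule of thumb is sketched below. The second function goes one step beyond this answer: it applies a Bonferroni correction, a common but conservative way to tighten the significance threshold when several variants are each compared against the control. Treat it as an optional refinement and an assumption of this sketch.

```python
def total_conversions_needed(per_variant: int, variants: int) -> int:
    """Total conversions across all variants, given a per-variant target."""
    return per_variant * variants

def bonferroni_alpha(alpha: float, variants: int) -> float:
    """Per-comparison significance threshold when each variant is compared to the control."""
    comparisons = variants - 1  # the control is not compared with itself
    return alpha / comparisons

for k in (2, 3, 4):
    total = total_conversions_needed(1_000, k)
    threshold = bonferroni_alpha(0.05, k)
    print(f"{k} variants: {total:,} conversions in total, "
          f"p-value threshold {threshold:.4f} per comparison")
```

With two variants the threshold stays at 0.05. With four variants each comparison against the control would need a p-value below roughly 0.0167.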

What if my A/B test shows no significant difference?

Not every test produces a winner, and that’s valuable information. A null result means the test did not detect a meaningful difference between the variants, so the change you tested is unlikely to move conversions much and you can focus optimization efforts elsewhere. Document the test, move on to your next hypothesis, and remember that learning what doesn’t work prevents wasting resources on ineffective changes.

Can I run multiple A/B tests simultaneously?

Yes, but ensure tests don’t overlap or affect the same elements. Running simultaneous tests on separate page elements (such as title and CTA button) is generally safe if you account for potential interaction effects. However, avoid running multiple tests that modify the same element or depend on each other’s outcomes, as this makes results difficult to interpret.

How do A/B testing results apply to marketplace listings?

Support varies by marketplace. Amazon offers a Manage Your Experiments tool to eligible brand-registered sellers, but many marketplaces provide no built-in A/B testing. Where native testing is unavailable, you can apply learnings from your website tests to optimize marketplace listings. Focus on testable elements like image order, title structure, and description formatting. Track performance over time as you make changes, though you won’t achieve the same statistical rigor as controlled website tests.

Should I test the same elements on mobile and desktop separately?

Absolutely. Mobile and desktop users exhibit different behaviors and face different constraints. A change that improves desktop conversions may hurt mobile performance due to screen size limitations or touch interface considerations. Segment your results by device type and consider creating device-specific tests for elements that display very differently across platforms.

Start Optimizing Your Product Pages Today

A/B testing transforms guesswork into data-driven decision-making, helping you systematically improve product page performance. Start with one of the five test ideas outlined above, commit to running it properly until reaching statistical significance, and implement winning variations permanently.

Remember that optimization is a continuous process, not a one-time project. Each test teaches you more about your customers and reveals new opportunities for improvement. The retailers who succeed with A/B testing maintain consistent testing programs, document their learnings, and gradually compound small improvements into significant competitive advantages.

As you optimize your product pages, ensure your customer support infrastructure can handle increased order volume. Try eDesk free to centralize support across all your sales channels and deliver exceptional service that converts browsers into loyal customers.